WEB SCIENCE | DISTRIBUTED APPROACHES TO THE WEB

Topics from the International Baccalaureate (IB) 2014 Computer Science Guide.

ON THIS PAGE

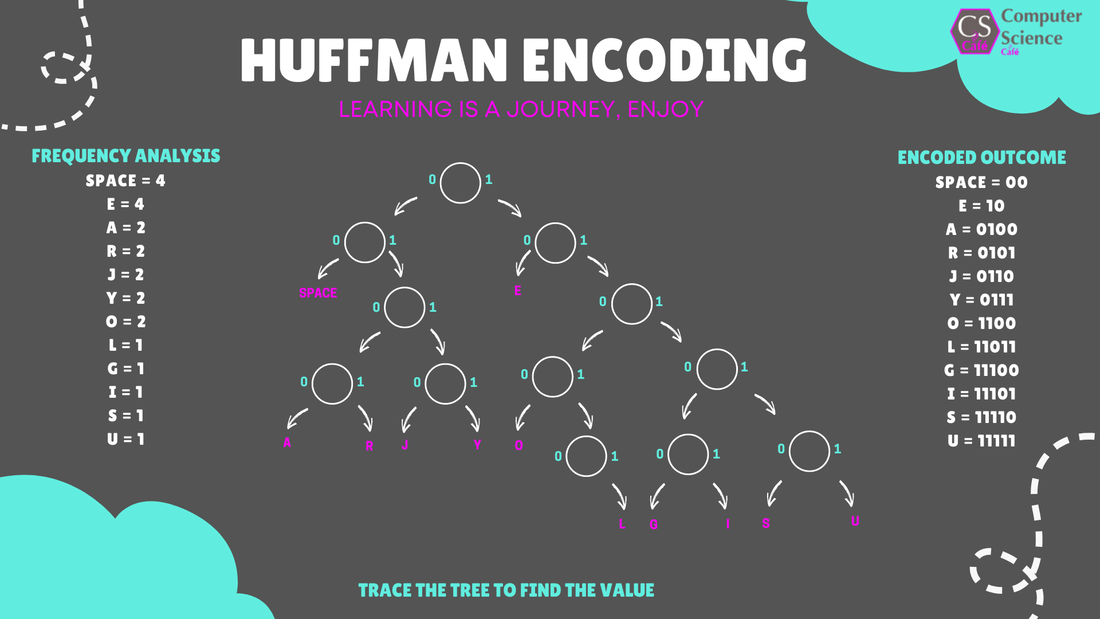

SECTION 1 | KEY TERMINOLOGY
SECTION 2 | COMPARISON OF FEATURES
SECTION 3 | INTEROPERABILITY AND OPEN STANDARDS
SECTION 4 | HARDWARE USED BY DISTRIBUTED NETWORKS
SECTION 5 | GREATER DECENTRALISATION OF THE WEB
SECTION 6 | LOSSY AND LOSSLESS COMPRESSION
SECTION 7 | DECOMPRESSION SOFTWARE